Running Docker commands inside Docker containers (on Windows)

Background

Recently I’ve been doing a lot of DevOps. We’re building a Sitecore solution with all the usual suspects involved (CM, CD, xConnect, Rendering Host, SXA, MSSQL, and so on) and for lack of a better expression, we’re doing it “containers-first”. Locally we’re using Docker Desktop and upstream we’ll likely be deploying our containers to K8s or some such. I say “likely” because it hasn’t really been decided yet and isn’t relevant to this post.

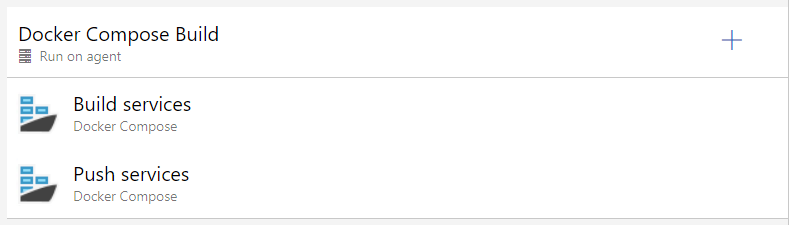

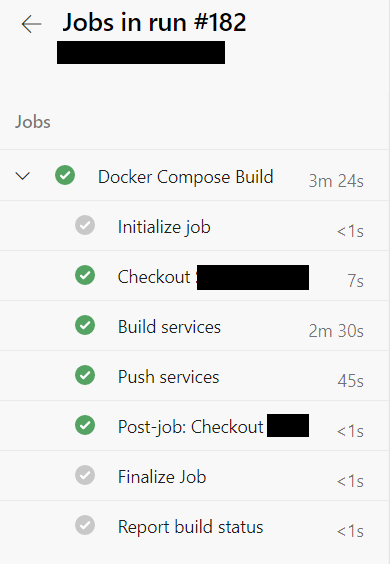

For source control we’re using Azure DevOps. We also use the build and release pipelines for our Continuous Integration and other related services. In our setup, currently, “build services” is really quite simple. “Build & Deploy” can be (more or less) described as “docker-compose build” followed by “docker-compose push”.

Very simple to set up locally and in fact very simple to set up in an ADO pipeline as well.

Locally we run a docker-compose build before docker-compose up. This works well: Docker’s layer cache kicks in, and only the parts of our images that have actually changed have to go through the build process.

Building nodejs

The key thing to notice here is the repeated ---> Using cache. Docker recognises that nothing has actually changed and therefore skips the build step. The whole process for our 15 or so images takes less than 20 seconds to complete if there are no actual steps needed.

So far so good.
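If you want a quick number for how much of a build came from cache, the log can be summarised with a few lines of script. This is only a rough sketch: it assumes the classic builder's log format (a `Step N/M :` line per instruction, followed by `---> Using cache` when the layer is reused), and both `summarize_build_log` and the sample log are made up for illustration, not taken from our actual build.

```python
import re

# Matches the "Step 2/3 : ..." lines printed by the classic Docker builder.
STEP_RE = re.compile(r"^Step (\d+)/(\d+) :")


def summarize_build_log(log_text):
    """Return (steps, cache_hits) for a classic-builder log (hypothetical helper)."""
    steps = 0
    cache_hits = 0
    for line in log_text.splitlines():
        stripped = line.strip()
        if STEP_RE.match(stripped):
            steps += 1
        elif stripped == "---> Using cache":
            cache_hits += 1
    return steps, cache_hits


# Invented sample log, for illustration only.
SAMPLE_LOG = """\
Building nodejs
Step 1/3 : FROM example/base:latest
 ---> 0123456789ab
Step 2/3 : WORKDIR /app
 ---> Using cache
 ---> 123456789abc
Step 3/3 : RUN npm ci
 ---> Using cache
 ---> 23456789abcd
"""

if __name__ == "__main__":
    steps, hits = summarize_build_log(SAMPLE_LOG)
    print(f"{hits} of {steps} steps came from cache")
```

Note that a FROM step never reports a cache hit in this format, so a fully cached build will still show fewer hits than steps.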

Repeating the build process on a Hosted Agent in Azure

When I initially set this up in ADO I just went with a build pipeline that I guess most would just pick by default. I used a default agent from the Azure Hosted Agents pool. And this is all you need, really. I set it up, I ran it, it completed just fine. All was well, or so I thought, but then something happened.

I ran it again.

Now if you’re not aware, Sitecore bases many of its images off Windows servercore, which is a fairly big image. We also have 3 images in our setup that each require their own Node installation, so several npm ci calls are happening during a full build. Building the entire set of containers on a clean machine, including downloads of gigabytes of images, takes a good half hour or so on a decent machine.

On the default Azure Hosted Agent it took about 1 to 1.5 hours. Every. Single. Time.

And in hindsight this was obvious. The reason is caches. Or rather, the lack of caches.

Every hosted build agent is essentially a fresh VM that is fired up to execute your pipeline. It comes with a set of build tooling installed. Once the pipeline is complete, the VM is tossed in the bin, never to re-appear anywhere again.

This of course also means that utilising the cache as we saw above? Yeah, that’s not happening. On every build, all base images have to be downloaded, every single image has to be rebuilt from scratch, and every single npm ci call has to be made.

Some would maybe just accept this. Not me.

Everything we build in this project will eventually end up being hosted on an on-premise server setup, so I happened to have a perfectly fine (and idle, currently) server available. Time for an on-premise Agent Pool. The server was already configured with basic Docker tooling (Windows Server 2019, not that it matters for this). You could just as easily provision an Azure VM to take the role of the “server” for everything I describe next.

Setting up an On-Premise ADO Agent

Setting up an on-premise agent is surprisingly simple.

- Create a PAT - a Personal Access Token

- Download and configure the agent (essentially you’re provided with a PowerShell script to run on the server)

- Run the agent (optionally configure it as a service)

All of this is described in a number of articles online, so I won’t go into more detail here.

- https://docs.microsoft.com/en-us/azure/devops/pipelines/agents/v2-windows?view=azure-devops

- https://docs.microsoft.com/en-us/azure/devops/pipelines/agents/agents?view=azure-devops&tabs=browser

I followed the steps, switched my pipeline to use this newly configured agent, and voilà. Building was now back to how I wanted it, with build times averaging about 4-5 minutes (after the initial run had been completed).

I then proceeded to configure an additional Agent Pool (for the upcoming Release Pipeline) and that’s where things got a little annoying. The scripts provided are a little fidgety (but workable enough) when you want to run multiple agents. But more importantly, our project would now rely on scripts being executed on a server, a running service that had to be monitored with new tooling, and installation documentation to go with it. Yeah, I wasn’t really having that; everything else in the project was to be a simple, flexible, scalable, deployable set of containers. Why should this be any different?

Setting up a Docker based On-Premise ADO Agent

So I went down a rabbit hole. My goal was to build an image that could run Docker commands (primarily docker-compose build), that would let me easily spin up multiple instances (agents), and that would let me replicate the entire setup in mere minutes should our (not yet stable) servers change, or for whatever other reason. For me this is sort of the whole point of containers in the first place.

I started here: “Run a self-hosted agent in Docker”. In short, you create a very simple Dockerfile and configure the container to run a script. The script is a modified version of the scripts used to configure the on-premise agent (which is not surprising). When built, you fire up the container with something like this:

docker run --restart always -d -e AZP_AGENT_NAME=DockerBuildAgent01 -e AZP_POOL="On Premise Build Agents" -e AZP_URL=https://dev.azure.com/yourproject/ -e AZP_TOKEN=yourPAT yourproject.azurecr.io/vsts-agent:latest

This took very little time to set up. A minute or so after running this, the new Agent showed up in my Agent Pool. Magic. I was now running an on-premise ADO Build Agent inside a Docker container on my work machine, which is in turn a Virtual Machine running off my Windows 10 host PC. Isn’t IT great? ;-)

Anyway. I added some Docker to the mix. With a little help from our friends at Chocolatey, my Dockerfile now looked like this:

# escape=`

My Agent was now Docker-capable.

I fired it up again and ran another build.

Turns out, running Docker inside a Docker container is not so straightforward

Which in hindsight is obvious and I don’t know why I was so overly optimistic going into this at all :D

When my build fired, I was instantly greeted with this rather cryptic error message.

C:\ProgramData\chocolatey\bin\docker-compose.exe -f C:\azp\agent\_work\1\s\docker-compose.yml -f C:\azp\agent\_work\1\s\docker-compose.override.yml -p "Our Project" build --progress=plain

After a bit of digging it turns out that this is Docker’s (rather unhelpful, I’d say) way of telling me: “Hey yo, I can’t find the Docker Daemon”. Without getting into too much detail here, the Docker Daemon is what actually runs the show. The Docker tooling (docker.exe, docker-compose.exe and so on) talks to the Daemon whenever it wants to actually do something with the images and containers.

And there was no Daemon. On my host PC, the Daemon comes from Docker Desktop. On the Windows Server, it came from the built-in Docker services role. Inside my container there was neither.
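A quick way to tell “no Daemon” apart from other failures is to ask the CLI for the server version. The sketch below is illustrative rather than anything from my actual setup: `daemon_reachable` is a hypothetical helper, and it relies on `docker version` exiting with a non-zero code when it cannot connect to a Daemon.

```python
import subprocess


def daemon_reachable(docker="docker", timeout=15):
    """Return True if the Docker CLI can reach a daemon, else False (hypothetical helper)."""
    try:
        result = subprocess.run(
            # Asking for the server version forces the CLI to contact the daemon.
            [docker, "version", "--format", "{{.Server.Version}}"],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        # No CLI on PATH, or the CLI hung while waiting for a daemon.
        return False
    # With no daemon to answer, the CLI exits with a non-zero code.
    return result.returncode == 0


if __name__ == "__main__":
    print("Docker daemon reachable:", daemon_reachable())
```

Run inside the agent container, a check like this would have reported False at this point in the story, since the tooling was installed but nothing was listening on the other end.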

I was, at this point, still being a bit naive about it all. It seemed obvious to me that installing Docker Desktop inside the container was probably a pretty stupid idea. I then considered enabling the Containers feature on the servercore base image I was using. Maybe that would have worked (a story for a different day perhaps), but I eventually ended up with a completely different solution. Thanks to Maarten Willebrands for the Rubber Duck assist that eventually led me in the right direction. The answer lies in this SO post: “How do you mount the docker socket on Windows?”.

I mentioned the Daemon above, but I wasn’t actually very aware of how it worked at the time. I sort of knew there had to be some kind of API in play, but I’ve never wanted or needed to know much about the inner workings and details of it all. But I’ll try and explain what I learned.

The Docker tools speak to the Daemon via an API, sometimes referred to as docker.sock or the “Docker Socket”. How this happens on Linux I don’t know, but on Windows it happens over something called “Named Pipes”. So when you install Docker Desktop on your Windows machine, a Named Pipe is set up. The Docker tooling can then “talk” to the Daemon via this Named Pipe.

And, as it turns out, this Named Pipe can be shared.

Sharing your host Docker Daemon Socket (Named Pipe) with a running Docker container

⚠ DO NOT EVER DO THIS WITH A CONTAINER YOU DON’T KNOW: Giving a running container access to your host operating system is not something you should do unless you absolutely know what that container is going to do with that access. Warnings aside, if you installed an on-premise agent anyway, this isn’t really much different, so don’t worry too much about it.

So following this technique, I fired up my Docker based VSTS Agent once again.

docker run --restart always -d -v "\\.\pipe\docker_engine:\\.\pipe\docker_engine" -e AZP_AGENT_NAME=DockerBuildAgent01 -e AZP_POOL="On Premise Build Agents" -e AZP_URL=https://dev.azure.com/yourproject/ -e AZP_TOKEN=yourPAT yourproject.azurecr.io/vsts-agent:latest

The “magic” here is this part: -v "\\.\pipe\docker_engine:\\.\pipe\docker_engine". Essentially I use a Docker “Volume Mount” to connect the Named Pipe docker_engine to my Docker container. This means that when the tooling inside my container tries to find a Docker Daemon, it will find one, since I’ve just provided the one from my host PC.

Very Zen!

I fired off another build. And it worked. Profit! :D
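Since one of the goals was spinning up several agents easily, the docker run command above can also be generated per agent. What follows is a minimal sketch, not what I actually run: `agent_run_args` and `start_agents` are hypothetical helpers, the numbering scheme for agent names is an assumption, and the pool, URL, token and image values are the same placeholders used earlier. It defaults to a dry run that only builds the commands.

```python
import subprocess

# Name of the Docker daemon's named pipe on Windows, as used in the command above.
DOCKER_PIPE = r"\\.\pipe\docker_engine"


def agent_run_args(name, pool, url, token, image):
    """Build the `docker run` argument list for one agent container."""
    return [
        "docker", "run", "--restart", "always", "-d",
        # Mount the host's named pipe at the same path inside the container.
        "-v", f"{DOCKER_PIPE}:{DOCKER_PIPE}",
        "-e", f"AZP_AGENT_NAME={name}",
        "-e", f"AZP_POOL={pool}",
        "-e", f"AZP_URL={url}",
        "-e", f"AZP_TOKEN={token}",  # placeholder; keep real PATs out of scripts
        image,
    ]


def start_agents(count, dry_run=True, **settings):
    """Build (and optionally run) commands for agents DockerBuildAgent01, 02, ..."""
    commands = []
    for i in range(1, count + 1):
        args = agent_run_args(name=f"DockerBuildAgent{i:02d}", **settings)
        commands.append(args)
        if not dry_run:
            subprocess.run(args, check=True)
    return commands


if __name__ == "__main__":
    for cmd in start_agents(
        2,
        pool="On Premise Build Agents",
        url="https://dev.azure.com/yourproject/",
        token="yourPAT",
        image="yourproject.azurecr.io/vsts-agent:latest",
    ):
        # Print each command with Windows-style quoting instead of running it.
        print(subprocess.list2cmdline(cmd))
```

Passing dry_run=False would actually start the containers, which of course only works on a host where the Daemon and its Named Pipe exist.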

TL;DR

Setting up an on-premise Azure Agent to do your Docker builds saves you a lot of time since Docker can use its caches when building. Our 15 or so image build takes on average around 5 minutes - end to end.

Setting up the Agent to run as a Docker container is easier to maintain. An added benefit is that you can run this Agent very easily on your own machine as well for your own hobby projects, e.g. if you’re on the free tier of Azure DevOps.

To make a VSTS Agent that can actually do your Docker builds (e.g. docker-compose build) you need Docker tooling inside the container and you need to connect the agent to your host PC’s Docker Daemon.

I’ve created a Gist, “Setting up a Docker based VSTS Agent that can run Docker commands”, with a set of example files showing how this can be achieved.

Enjoy! :-)