Sitecore Docker for Dummies

Part 3, Deploying and Debugging your Visual Studio Solutions

Now we’re getting some work done.

Special thanks to Per Bering. Without his patience with my stream of questions, this post would never have been completed this quickly.

This is part 3 in a series about Sitecore and Docker.

- Part 1: Docker for Dummies - Docker 101 or Docker basics…

- Part 2: Docker for Dummies - Setting Up Sitecore Docker Images

- Part 3: Docker for Dummies - Deploying and Debugging your Visual Studio Solutions (using Sitecore and Docker)

For this post, I am flat out assuming you know and understand how a Filesystem Publish from Visual Studio works.

Right, strap in. If you’ve followed the first two posts, you’re actually almost there. There are just a few minor tweaks that need to happen for you to be able to fully enjoy and work with your new Sitecore Docker containers.

I’m going to be covering a lot of ground here, but I promise I’ll keep it For Dummies style. Feel free to dig deeper into any particular area of interest on your own time. The rest of us got stuff to get done ;-)

Deploying (publishing) files to your Docker container

Docker Volumes, the briefest introduction ever

A few things to get us started. The first thing to keep in mind is that your Docker container is disposable. It exists only for as long as you keep it running. Once you take it down (docker-compose down), it is gone and will come up fresh when you fire it up again. This is what I said (with some disclaimers) in previous posts, and this remains true.

But if you’ve played around with some of the examples from the previous posts, you also know this isn’t entirely true. You can create items inside Sitecore, for instance, and they’re still there when you come back. So why is that?

The answer is Docker Volumes. Something I could probably write 10 posts about and not be done; so let’s just get to the TL;DR already shall we? Open up the docker-compose.yml and let’s take a look at that SQL Server Container. It looks like this:

sql:

As you probably guessed, the section to pay attention to here is the volumes: one. It has one simple volume mapping - translated from Dockeresque, it reads like this:

Docker, please map my local .\data\sql folder to C:\Data inside your container
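In docker-compose terms, that request is a single entry under volumes:. Here is a minimal sketch of the relevant fragment, with everything except the mapping left out. The rest of the service definition (image, ports and so on) is whatever your file already contains and is not reproduced here.

```yaml
services:
  sql:
    # image, ports and other keys: unchanged, omitted from this sketch
    volumes:
      # <host path>:<container path>
      - .\data\sql:C:\Data
```

The left side of the colon is relative to the folder that holds docker-compose.yml; the right side is the path as seen from inside the container.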

And your SQL Server image is configured to mount its databases from the C:\Data folder. It really is that simple (it isn’t) in our For Dummies universe. If you’re curious, go look at D:\Git\docker-images\images\9.2.0 rev. 002893\windowsservercore\sitecore-xp-sqldev\Dockerfile and see the mapping. You’re looking for:

DATA_PATH='c:/data/' `

Ok so. The easiest way to think about this is to treat the mappings as symlinks (which is not far from being true) between your Container and your native OS filesystem. Unlike a VM, where you would need to copy files into it for it to store on its local VHD, here we just create a direct connection between the two worlds. Wormhole; but no DS-9. And The Dominion is actually called Daemon.

Configuring volume links to a Windows container, a few caveats and gotchas.

Right so with that out of the way, it is clear that we need to punch another hole into our Container. We need to create a link between our webroot and somewhere on our host OS - so that we can publish our solution to it.

This is actually a little more complicated than it sounds. For reasons I won’t go into here (I don’t know yet lol), you can only create a volume link between the host OS and an existing directory inside the container on Linux Docker, not on Windows. Bummer. Fortunately, our friends maintaining the docker-images repository have got our backs and have created a small PowerShell script that essentially does the following:

- Monitor folder A for changes

- Robocopy these with a brick tonne of parameters to folder B

All we really have to do is fire up that script inside the Container, and the basics are in place for us to get going. Back to the docker-compose.yml file.

Let’s start simple. Below the image: entry, we add this:

entrypoint: cmd "start /B powershell Watch-Directory C:/src C:/inetpub/sc"

So entrypoint: is us telling Docker; “when you start, please run this”. AutoExec.bat all over again.

Watch-Directory is the script I mentioned above; it is already baked into your image and Container. c:\src is a non-existing folder in the container, and c:\inetpub\sc is the default location of your webroot inside the Container.

Great. So now all we need is a volume mapping:

- .\deploy:C:\src

And we’re in business. Right? Using this, I should be able to publish my Visual Studio solution to .\deploy, and it will automagically get moved to the webroot.

And sure enough.

Publishing your Visual Studio projects to your Container

Right. This is where you do some work that I don’t even want to write about :P

Set up a blank Visual Studio Solution with a Web project in it, like you would for any Sitecore project. Or grab one of your existing projects. Or whatever. I’m going to skip from this point, straight to publishing - this post is already going to be long enough.

Also, if you’re still messing about with the test containers from D:\docker-images\tests\9.2.0 rev. 002893\windowsservercore - it’s time to move a bit. Move the following:

- data

- .env

- docker-compose.xp.yml

To your solution root. Rename docker-compose.xp.yml to just docker-compose.yml. Make the below modifications to it (CD and CM server entries). Turn off any containers you may have running and from your solution root now run docker-compose up. Voila.

Here’s what your docker-compose.yml should resemble (not touching all the other stuff like Solr and SQL):

cm:

And CD (we need to deploy there as well):

cd:
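Pieced together from the entrypoint and the volume mapping discussed earlier, the two additions per service could be sketched like this. Only the entrypoint line and the .\deploy mapping come from this post; keep whatever image, ports, links and other volume mappings your own file already has.

```yaml
services:
  cm:
    # image, ports, links: unchanged, omitted from this sketch
    entrypoint: cmd "start /B powershell Watch-Directory C:/src C:/inetpub/sc"
    volumes:
      # publish target on the host -> watched folder in the container
      - .\deploy:C:\src
      # ...your existing volume mappings stay here

  cd:
    # image, ports, links: unchanged, omitted from this sketch
    entrypoint: cmd "start /B powershell Watch-Directory C:/src C:/inetpub/sc"
    volumes:
      - .\deploy:C:\src
      # ...your existing volume mappings stay here
```

Both services point at the same .\deploy folder, so a single publish lands on CM and CD alike.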

Fortunately, the hard work is done. All you need to do now is make a Publish Profile (or use MSBuild in whatever form you fancy, gulp, Cake, whatever you want) and get your project/solution published to .\deploy.

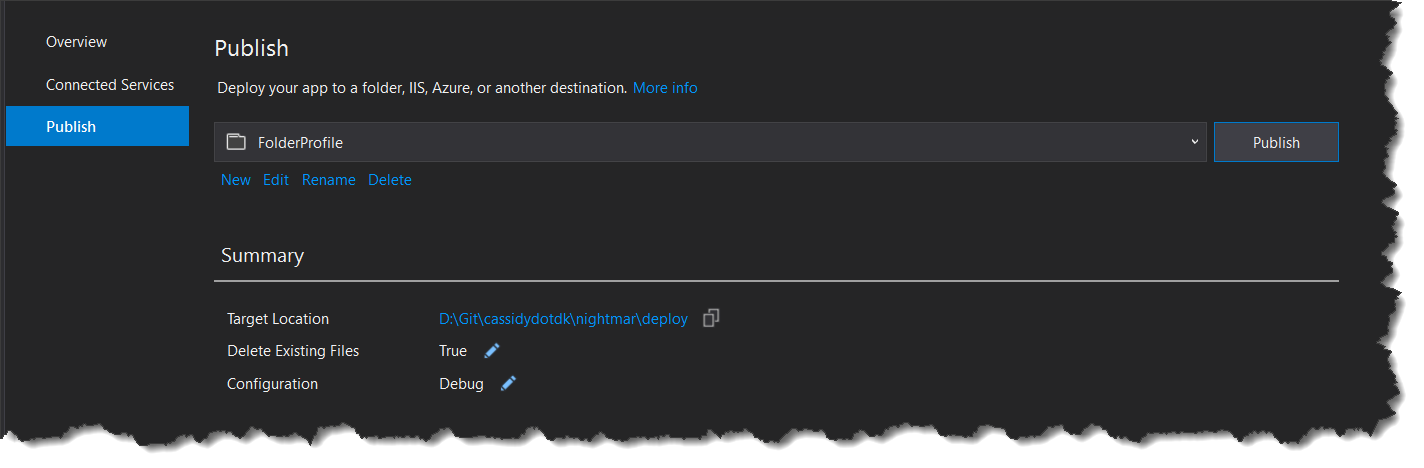

It can look like this:

You’ve seen that before. Nothing fancy here. Run “Publish”. Then pay attention to your Docker output window. You should see something like this:

cm_1 | 23:38:20:575: New File C:\src\app_offline.htm

The exact contents here will, obviously, vary greatly depending on what it is you’re actually publishing. Suffice it to say, when you see this output, Watch-Directory has done its thing and copied the contents of your .\deploy folder to the webroot inside your container(s).

Simple as.

Sooner or later though, we all mess up. That’s what we have debuggers for.

Debugging your Visual Studio Project inside the Container

So debugging your solution inside a Docker Container is not quite as simple as you’re used to. You can’t just go Debug -> Attach to process, because the Container is running in its own little world of isolation.

We need to punch a hole in, once again. But this time not a filesystem one.

If you’ve ever debugged a remote IIS server, this process is exactly the same. You basically need a Visual Studio Remote Debugging Monitor (msvsmon.exe) running inside the container, which Visual Studio can reach out to for the debug session. This is a lot simpler than it sounds. Let’s grab our entrypoint: once again.

entrypoint: cmd /c "start /B powershell Watch-Directory C:/src C:/inetpub/sc & C:\\remote_debugger\\x64\\msvsmon.exe /noauth /anyuser /silent /nostatus /noclrwarn /nosecuritywarn /nofirewallwarn /nowowwarn /timeout:2147483646"

So I’ve expanded things a bit. I’ve added /c so that cmd runs the quoted command line; start /B is what launches Watch-Directory in the background so it won’t hold us up and block things. After the &, I add a call to C:\remote_debugger\x64\msvsmon.exe with another bucket load of parameters.

C:\remote_debugger doesn’t exist inside the Container. But we know how to solve that.

Modify docker-compose.yml once more, and make it look like this:

For CM:

cm:

And CD:

cd:
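As a sketch of what that can look like for CM (CD gets the same two changes): the entrypoint is the one shown above, and a second volume mapping exposes the Remote Debugger folder from the host as C:\remote_debugger. The host path below is an assumption, namely the default install location for Visual Studio 2019 Professional; check where msvsmon.exe actually lives on your machine.

```yaml
services:
  cm:
    # image, ports, links: unchanged, omitted from this sketch
    entrypoint: cmd /c "start /B powershell Watch-Directory C:/src C:/inetpub/sc & C:\\remote_debugger\\x64\\msvsmon.exe /noauth /anyuser /silent /nostatus /noclrwarn /nosecuritywarn /nofirewallwarn /nowowwarn /timeout:2147483646"
    volumes:
      - .\deploy:C:\src
      # Assumed host path (default VS 2019 Professional install); adjust to your setup
      - C:\Program Files (x86)\Microsoft Visual Studio\2019\Professional\Common7\IDE\Remote Debugger:C:\remote_debugger
      # ...your existing volume mappings stay here
```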

If you’re not running Visual Studio 2019 Professional, you will need to change that path.

Right, now we have the Remote Debugger running inside the Container. If you want to debug - I guess I shouldn’t have to say this, but I made this mistake myself 😂 - make sure you compile in debug configuration, not release in your Publishing Profile.

Attaching to the Container process is slightly different than normal however. I’ve found various guides on the net that seem to indicate it should be even easier than what I do here - but I couldn’t find any other way. And it’s not too bad actually.

First, find the IP address of your CM server [^1].

PS D:\Git\cassidydotdk\nightmar> docker container ls

Then run docker inspect on the CM container and find the IP address near the bottom of the output.

"NetworkID": "5ae232363202af17ebd08220d6f09550d262dd23e03482a329d87e602deadd85",

So 172.22.47.113 here. And then I know that the Visual Studio 2019 debugger listens on port 4024. If you’re running any other version, follow the link.

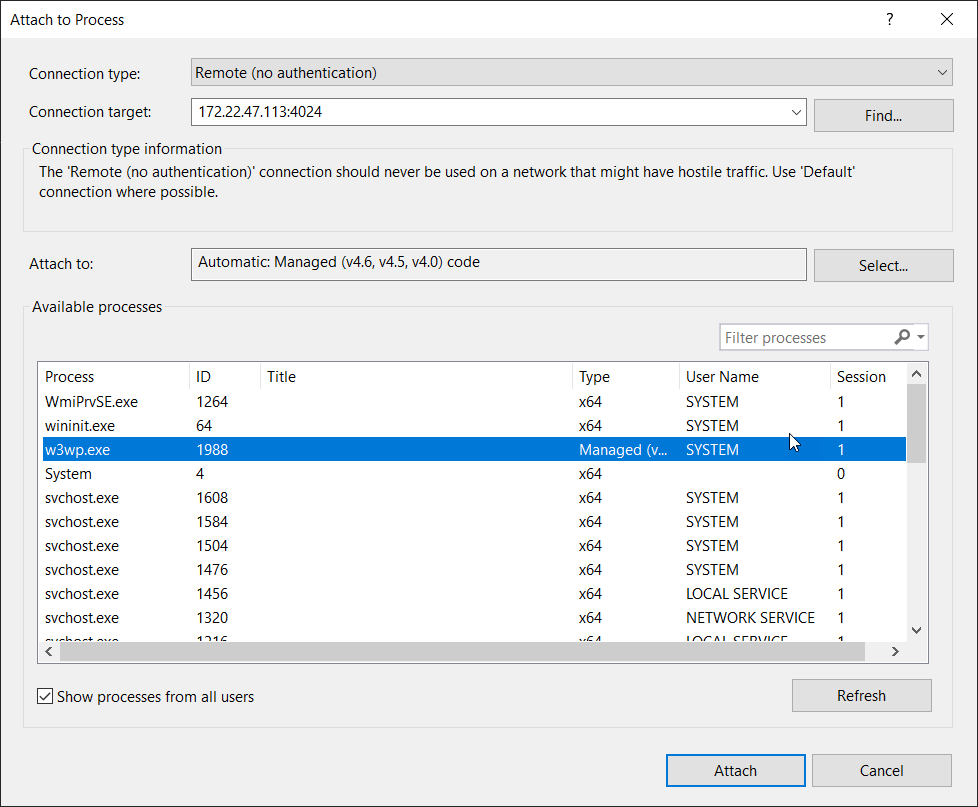

Right, so with this information in hand - 172.22.47.113:4024, it’s time to get debugging. In Visual Studio do Debug => Attach to process (so far, everything as per usual).

Then switch “Connection Type” to Remote (no authentication) and paste the IP address and port into the Connection Target field. Click “Refresh” (bottom right) and you should see something like this:

Neat huh? Am sure you can take it from here.

Summary

AKA when even the For Dummies version becomes a long text.

- Copy/paste the volume links and entrypoint into your docker-compose.yml file. Feel absolutely free to shamelessly steal mine from this post; I copied them from someone else.

- Publish your solution to .\deploy

- When you need to debug, attach to the remote instead of local

That’s it. You don’t really need to worry about much more than this. Well except how to set up Unicorn obviously 😎 - I’ll do a side-post about this tomorrow. You actually have enough information in this post to easily do it yourself. Spoiler: map .\unicorn to c:\unicorn, set <patch:attribute name="physicalRootPath">c:\unicorn\$(configurationName)</patch:attribute>, no filesystem watcher required.
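The volume half of that spoiler could be sketched like this. It is an illustration only: the physicalRootPath patch goes in your Unicorn configuration and is not shown, and the rest of the service definition is whatever you already have.

```yaml
services:
  cm:
    # image, entrypoint, ports, links: unchanged, omitted from this sketch
    volumes:
      # serialized items on the host -> folder Unicorn reads inside the container
      - .\unicorn:C:\unicorn
      # ...your existing volume mappings stay here
```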

[^1]: If you’re using VSCode, you might have noticed that it comes with some extensions for Docker. If you expand the Docker icon, you can see your running containers. Right click one and go “Attach Shell” for an instant PowerShell command prompt inside the container. Run ipconfig to get its IP address. Very simple, and useful for a heap of other things as well.