Fun with Julia and Taichi

Strictly speaking, it’s not Sunday today. What can I say? Guilty as charged, sometimes I have fun on Saturdays as well :-)

So what happened was this: I was really going to make a post this weekend about how you can start doing more advanced stuff with Pygame. You see, Pygame is by nature a CPU renderer. As you can probably imagine, this puts a pretty hard limit on just how complex and advanced you can get with it. No doubt you’ve heard about this thing called “Graphics Cards” and GPUs and how they massively help us with complex and real-time visuals. Yea. So Pygame doesn’t do that. At least not yet.

Anyway. The way we can solve that with Pygame - or one way, I should say - is to combine Pygame with modules that work natively with your GPU. You can do a search, there are dozens of them. I generally recommend ModernGL and to be honest, that’s what I was going to write about. I still will, worry not, but… I mean… look. I was sitting around and doing some research in preparation, and then I came across this thing. Taichi. And when it comes down to it I guess I’m 100% like Gollum - “ooooo shiney!”. And here we are.

So today we’ll be looking at ~~ModernGL~~ Taichi. Deal with it. It’ll be fun :-)

So what is Taichi?

Well, according to The Taichi Website:

Taichi is a domain-specific language embedded in Python that helps you easily write portable, high-performance parallel programs.

You can explore the site on your own. I’ll just jot down a few (simplified) highlights of how you can think about it.

- It hides away the inner details of how to achieve fast parallel computing

- Parallel computing; if you’re thinking CUDA cores and GPUs, you’re on the right track

- While we’re using a Python front, behind the scenes Taichi runs high-speed native code for whatever platform you’re on

- The code you create will run on Windows, macOS, Linux, mobile devices, whatever. Run it on your QNAP for all it cares, though don’t expect a GPU there ;-)

- It’s integrated with other popular frameworks in the Python ecosystem (for machine learning, maths, and so on)

Right. So anyway. When exploring a new framework or a new language, what do we do? We create “Hello World” of course.

And that’s where things got really interesting.

Hello World with Taichi

So when you have a high-performance parallel computing bells and whistles framework you want to introduce, do you really think printing out the letters “hello world” is going to impress anyone? No, I wouldn’t think so.

Right. So how about rendering out a fractal then? Fractals are fun. They’re enjoyable to look at (well… if you’re sufficiently geeky inclined I suppose. Guilty, once again, as charged.), and they can easily put even the best modern computers into a state of frustration due to the computational requirements.

Digression

My first encounter with Julia (and her brother, Mandelbrot) was in the early 90s. I was reading about fractals and was happily chippin’ away in C on my trusted Amiga 500, implementing the formulas and necessary calculations involving Imaginary Numbers without really knowing what they were or how they worked. I still don’t by the way. Rendering out Julia or Mandelbrot in what must have been something like 320x320 pixels took a good solid while. I can’t remember the exact numbers, but probably around 30 minutes or so, at 128 max iterations. If someone had told me back then that I would one day be rendering it out in real-time at 60 FPS, I’m not at all convinced I would have believed them.

Getting Started with Taichi

First, make sure you have the Taichi module installed. Easily done:

    pip install taichi

Job done. Then fire up VSCode (or… you know… whatever you prefer).

Then let’s import Taichi and initialise it. Instruct Taichi to use GPU architecture (where possible). Make those CUDA cores work for you.

    import taichi as ti
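If you want a quick sanity check that the install worked before going further, something like the sketch below will do. The variable name `taichi_available` is my own invention for illustration. The `ti.init(arch=ti.gpu)` call asks Taichi for a GPU backend; as far as I know it falls back to the CPU (with a warning) when no usable GPU is found.

```python
import importlib.util

# True when the taichi package can be found by this Python interpreter
# (taichi_available is a hypothetical helper name, not part of Taichi)
taichi_available = importlib.util.find_spec("taichi") is not None

if taichi_available:
    import taichi as ti
    ti.init(arch=ti.gpu)  # request a GPU backend
else:
    print("Taichi not found; run 'pip install taichi' first")
```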

And now, let’s set up the basics for preparing to render the Julia set. We’ll define a few constants for you to play around with later, and create an array to hold our “canvas” or “surface” or “screen buffer” or “drawing area” or whatever term you prefer. A two dimensional array. Taichi uses a rectangle with a 2:1 aspect ratio as their default “Hello World” example; we’ll do the same here.

    dim = 768

Notice that our “pixels” array will be of the Taichi type “field”. This is because we need the array to be allocated by Taichi in whatever memory space is most appropriate to the underlying architecture used. Since this is GPU in our case here, the array will be allocated directly on the GPU VRAM. At least I think so. Oh, and for “field” think “corn field”, not “form field” :D

The array we create will, in this case, be 1536x768 pixels. Adjust as desired.

Your first Taichi function

Right. Now it’s time to unlock the true power of Taichi. We’ll create a Taichi function to do the heavy lifting of calculating “a Julia pixel”. A very quick explanation of this fractal set would be something like this:

- For every pixel on your screen/display area

- Run “magic calculation” a number of times, up to a maximum of max_iterations

- If your calculated number heads off towards infinity, paint the pixel black

- Otherwise paint the pixel in a color between black and white. 0.1 being almost fully black, 0.9 being almost fully white. 0.5 will be grey.

Super simplified. But you either roughly know how Julia works and don’t need the explanation, or you don’t and aren’t interested - in which case the above will be enough. Go check out the Wiki for the Julia set if you want to dive deeper.
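For the curious, here is a minimal plain-Python sketch of that “magic calculation” for a single point, with no Taichi involved. The function name `julia_iterations`, the escape radius of 2.0 (the common textbook choice) and the constant `c` are all assumptions picked for illustration, not values from the code in this post. It only counts iterations; how you turn that count into a colour is up to you.

```python
def julia_iterations(z, c, max_iterations=100, escape_radius=2.0):
    """Count how many times z -> z*z + c runs before z escapes (or we give up)."""
    iterations = 0
    while abs(z) <= escape_radius and iterations < max_iterations:
        z = z * z + c
        iterations += 1
    return iterations

c = complex(-0.8, 0.156)  # an arbitrary Julia constant, picked for illustration

print(julia_iterations(complex(0, 0), 0j))    # 100: never escapes, so we give up
print(julia_iterations(complex(1.5, 0), 0j))  # 1: escapes after a single step
print(julia_iterations(complex(3, 0), c))     # 0: already outside the radius
```

Python’s built-in `complex` type does the squaring for us here, which is why the loop body is a one-liner.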

So anyway. For every pixel. And number of iterations. That means for our little set here, we’ll (worst case) need to do 1536 * 768 * 100 (max_iterations) calculations; 117,964,800 in total. ~120 million calculations. To calculate one frame of Julia. And before this little example is done, we’ll be doing that at 60 FPS. So 7,077,888,000 calculations (worst case) will be required, every second. See where this is going? Yea. Even the best of modern CPUs will struggle to keep up with that.
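If you want to redo that arithmetic for a different canvas size or iteration cap, a few lines of plain Python are enough. The variable names are mine; the numbers are the ones from this post.

```python
width, height = 1536, 768   # canvas size used in this post (dim * 2, dim)
max_iterations = 100        # worst case: every pixel runs the full loop
fps = 60

per_frame = width * height * max_iterations
per_second = per_frame * fps

print(f"{per_frame:,} iterations per frame")    # 117,964,800
print(f"{per_second:,} iterations per second")  # 7,077,888,000
```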

So back to the Taichi function:

    @ti.func

We tell Python and Taichi that this is a Taichi Function by adding a decorator. Those of you familiar with C# will probably have used attributes, which look similar on the page. Anyway, with that done, the syntax remains very Pythonesque for the remainder. Inside the function we’re basically performing the core of the Julia fractal calculation, which involves squaring a complex number (a number with a real and an imaginary part). This is the process we repeat over and over to determine the pixel colour.
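The squaring itself is less scary than it sounds. Here is a plain-Python sketch of the maths, assuming we store a complex number as a (real, imaginary) pair. The name `complex_sqr` is borrowed from Taichi’s own Julia example; this is not the Taichi function from this post, just the same formula written out by hand and cross-checked against Python’s built-in `complex` type.

```python
def complex_sqr(re, im):
    """Square the complex number re + im*i and return (real, imaginary)."""
    # (a + bi)^2 = a^2 + 2abi + (bi)^2 = (a^2 - b^2) + (2ab)i, because i^2 = -1
    return re * re - im * im, 2 * re * im

# Cross-check against Python's built-in complex type
z = complex(3, 2)
print(complex_sqr(3, 2))  # (5, 12)
print(z * z)              # (5+12j)
```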

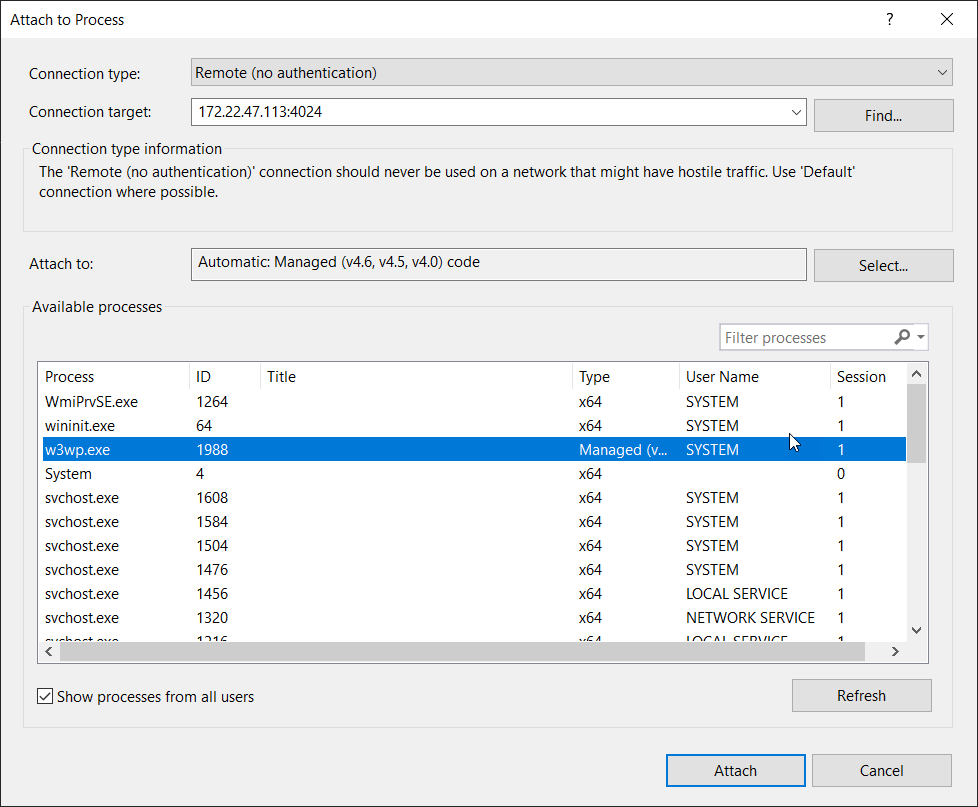

And here’s the thing. Behind the scenes, Taichi will be running this as what is known as a “Compute Shader”. This is a huge topic that I won’t be covering today but in a very simplified way, you can think of this as “code that will run in parallel on the GPU for each pixel on the screen”. Very simplified, but let’s move on.

Drawing the Julia set

With our function in place, it’s time to build our loop that will draw out every pixel we need. Once again we need a Taichi decorator to tell Python and Taichi what’s what. And as before, we expect this code to be running directly on the GPU, since there’s no way our CPU could ever keep up with what we’re doing here.

    @ti.kernel

At the risk of disappointing, I won’t actually go into much detail here. The calculation itself is “well known” in the sense that it looks like any other Julia calculation you can find anywhere. Boiled down and distilled, this is what is going on:

- Set up the loop over our pixels “field”

- Initialise the Julia Imaginary Numbers

- Set up the iterations loop, and loop up until a maximum of max_iterations have run

- Run our complex_sqr() Taichi Function inside the loop

- Put a colour back in our pixels array based on the number of iterations we actually ran

At the end of this, we have an array of pixel colours sitting in VRAM/GPU memory. All we have left to do now is get those pixels up on our screen somehow.

    gui = ti.GUI("Fun with Julia", res=(dim*2, dim))
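To tie the pieces together, here is a minimal end-to-end sketch modelled on Taichi’s own Julia “Hello World” example. Treat it as an approximation under stated assumptions, not as the exact code from my repo: the names `run_julia` and `paint`, the Julia constant `c`, the escape bound of 20, the `0.03` animation step and the shade mapping (fewer iterations gives a lighter pixel, and a point that never escapes ends up black) are all taken from or inspired by Taichi’s example. Flip the shade mapping if you prefer it the other way around. Everything is wrapped in one function, with the import inside, so nothing happens until you call it.

```python
def run_julia(dim=768, max_iterations=100):
    """Render an animated Julia set in a Taichi window (sketch, see assumptions above)."""
    import taichi as ti  # imported here so nothing runs until run_julia() is called

    ti.init(arch=ti.gpu)  # ask for a GPU backend

    # The "canvas": a 2:1 field of floats, one grey value per pixel
    pixels = ti.field(dtype=float, shape=(dim * 2, dim))

    @ti.func
    def complex_sqr(z):
        # (a + bi)^2 = (a^2 - b^2) + 2ab*i, stored as a 2-component vector
        return ti.Vector([z[0] ** 2 - z[1] ** 2, 2 * z[0] * z[1]])

    @ti.kernel
    def paint(t: float):
        for i, j in pixels:  # outermost loop: Taichi parallelises this
            c = ti.Vector([-0.8, ti.cos(t) * 0.2])           # assumed Julia constant
            z = ti.Vector([i / dim - 1, j / dim - 0.5]) * 2  # pixel -> complex plane
            iterations = 0
            while z.norm() < 20 and iterations < max_iterations:
                z = complex_sqr(z) + c
                iterations += 1
            # Assumed shade mapping: never escaping gives 0.0 (black)
            pixels[i, j] = 1 - iterations / max_iterations

    gui = ti.GUI("Fun with Julia", res=(dim * 2, dim))
    frame = 0
    while gui.running:  # loop until the window is closed
        paint(frame * 0.03)
        gui.set_image(pixels)
        gui.show()
        frame += 1
```

Calling `run_julia()` opens the window and animates until you close it. Try `run_julia(dim=384, max_iterations=50)` on slower hardware.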

Happy Sunday! :-)

Or Saturday. Or whatever.

That’s it, basically :-)

Needless to say I suppose, but this only scratches the very surface of what you can do with Taichi. I do encourage you to take a look at some of their examples or play around with some of the parameters in our example here.

And have some fun with it! :-)

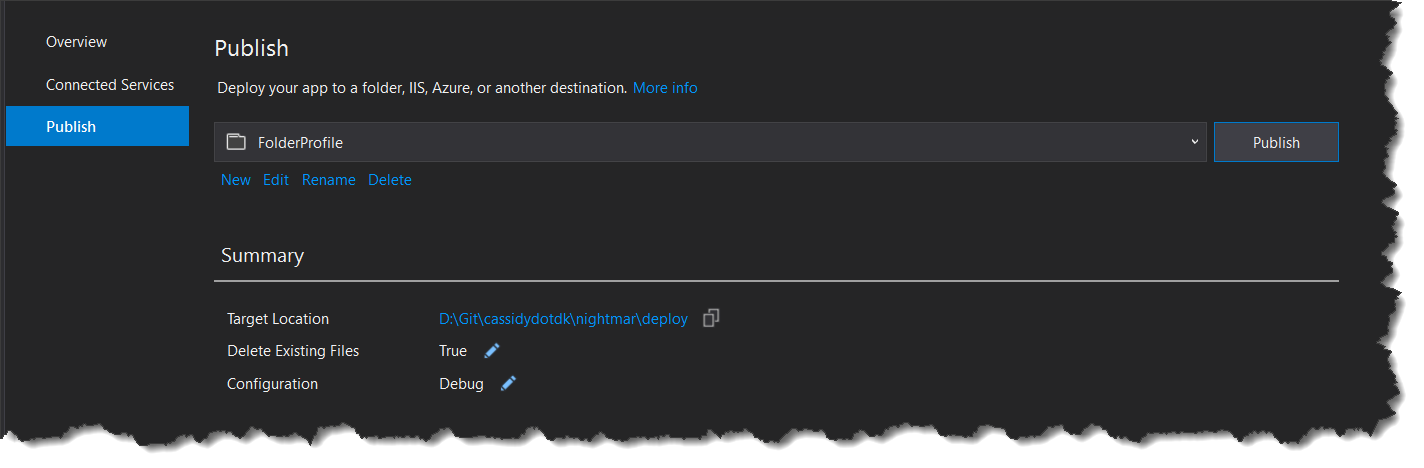

You can find this code in my Python GitHub repo.